Testing AI models is essential for reliability, performance, and security. Learn proven testing strategies, methods, and tools for building trustworthy AI applications, and discover how to test AI models effectively for consistent results.

Artificial intelligence (AI) powers growth across industries, but its reliability depends on how effectively we test AI models. Unlike traditional software testing, AI model testing needs a broader set of techniques to validate performance and adaptability. The testing process checks that an AI system performs consistently under different conditions. Understanding how to test AI models is therefore key to building reliable, high-quality AI applications.

Why is AI Testing a Must?

AI testing is more than checking for errors. It’s about verifying that a machine learning model behaves as expected in real-world scenarios. Developers must validate results across different use cases and confirm that predictions align with the intended purpose. Model testing also exposes hidden weaknesses such as bias, adversarial vulnerabilities, or reduced accuracy under stress.

Since AI applications integrate with other digital systems, integration testing becomes vital. The ability to evaluate how an AI model interacts with surrounding services determines overall system resilience. Ultimately, testing is essential to guarantee dependable artificial intelligence solutions.

Core Testing Strategies for AI

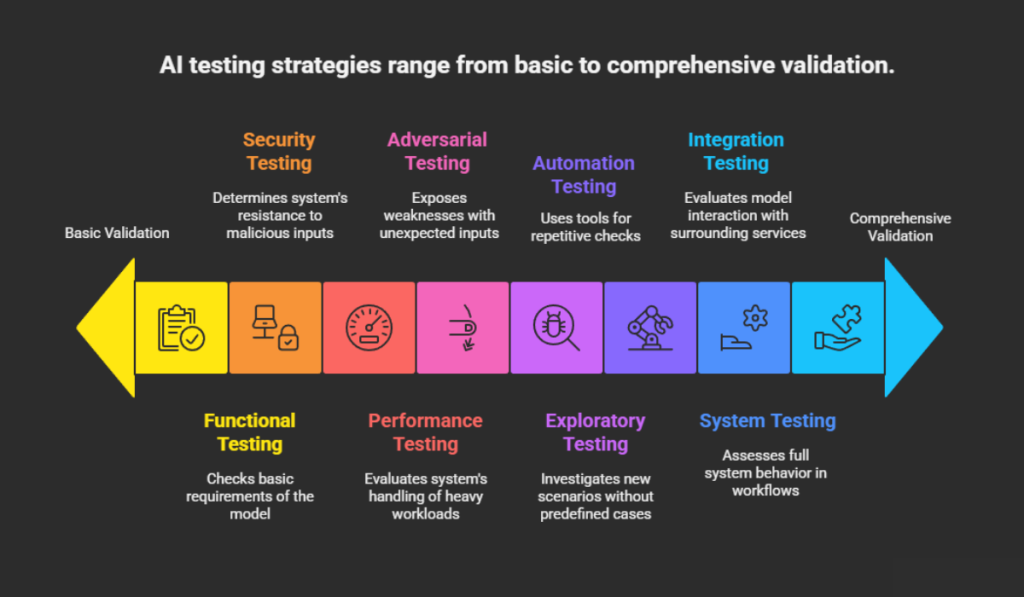

The nature of AI applications demands specialized testing strategies. Functional testing checks that a model meets its basic requirements, while additional layers such as security testing and performance testing determine whether the system can withstand malicious inputs or heavy workloads. Some of the most important strategies are:

- Adversarial testing: exposes weaknesses by feeding the model unexpected or manipulated inputs.

- Exploratory testing: investigates new scenarios without predefined test cases.

- Automated testing: uses testing tools and scripts for repetitive checks.

- System testing: evaluates how the full AI system behaves when integrated into larger workflows.
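As a rough illustration of adversarial testing, the sketch below perturbs the inputs to a toy classifier and measures how often the prediction flips. The `toy_sentiment_model` function, the perturbation rules, and the sample sentences are hypothetical stand-ins; a real test would call your own model and use perturbations suited to your domain.

```python
import random

def toy_sentiment_model(text: str) -> str:
    """Hypothetical stand-in for a real model: a naive keyword classifier."""
    positive = {"good", "great", "excellent"}
    words = text.lower().split()
    return "positive" if any(w.strip(".,!?") in positive for w in words) else "negative"

def perturb(text: str, rng: random.Random) -> str:
    """Apply one small, meaning-preserving perturbation (assumed rule set)."""
    choice = rng.choice(["upper", "whitespace", "punctuation"])
    if choice == "upper":
        return text.upper()
    if choice == "whitespace":
        return "  " + text.replace(" ", "  ") + " "
    return text + "!!!"

def flip_rate(model, inputs, n_variants=5, seed=0):
    """Fraction of perturbed inputs whose prediction differs from the original."""
    rng = random.Random(seed)
    flips = total = 0
    for text in inputs:
        baseline = model(text)
        for _ in range(n_variants):
            total += 1
            if model(perturb(text, rng)) != baseline:
                flips += 1
    return flips / total

samples = ["The service was great", "This product is bad", "An excellent result"]
rate = flip_rate(toy_sentiment_model, samples)
```

A team could fail the build when `rate` rises above a threshold it has agreed on, since a high flip rate suggests the model is sensitive to changes that should not matter.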

Testing Methods for Machine Learning Models

AI models change with new data, so tests need to be repeatable as the model evolves. Teams frequently write test cases that simulate user interactions, compare outputs with expected values, and track deviations.
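Comparing outputs with expected values and tracking deviations could look something like the following sketch. The `predict_price` model, the test cases, and the 5% tolerance are all illustrative assumptions, not values from any real system.

```python
def predict_price(square_meters: float) -> float:
    """Hypothetical model under test: a fixed linear pricing rule."""
    return 1500.0 * square_meters + 20000.0

# Each case pairs an input with the value the team expects (illustrative numbers).
TEST_CASES = [
    (50.0, 95000.0),
    (80.0, 140000.0),
    (120.0, 200000.0),
]

def run_cases(model, cases, rel_tol=0.05):
    """Return one record per case with the relative deviation and a pass flag."""
    results = []
    for x, expected in cases:
        actual = model(x)
        deviation = abs(actual - expected) / abs(expected)
        results.append({
            "input": x,
            "expected": expected,
            "actual": actual,
            "deviation": deviation,
            "passed": deviation <= rel_tol,
        })
    return results

report = run_cases(predict_price, TEST_CASES)
failures = [r for r in report if not r["passed"]]
```

Storing the `deviation` field from each run, rather than only the pass flag, makes it possible to see a model drifting toward the tolerance limit before it actually fails.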

Important testing methods include:

- Box testing (white box and black box) to analyze both internal structure and external performance.

- Functional testing.

- Stress testing.

- Integration testing.

These methods help organizations build reliable AI.
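To make stress testing more concrete, here is a minimal sketch that sends concurrent requests to an inference function and summarizes latency and errors. The `predict` function, the request count, and the worker count are assumptions chosen for illustration; a real stress test would target the deployed endpoint.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles

def predict(x: int) -> int:
    """Hypothetical inference call; the sleep simulates model latency."""
    time.sleep(0.001)
    return x * 2

def timed_call(fn, arg):
    """Run fn(arg) and return (latency_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        fn(arg)
        ok = True
    except Exception:
        ok = False
    return time.perf_counter() - start, ok

def stress_test(fn, n_requests=200, workers=16):
    """Send n_requests concurrently and summarize latency and errors."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda i: timed_call(fn, i), range(n_requests)))
    latencies = sorted(lat for lat, _ in results)
    cuts = quantiles(latencies, n=100)  # 99 cut points: cuts[49] ~ p50, cuts[94] ~ p95
    return {
        "requests": n_requests,
        "errors": sum(1 for _, ok in results if not ok),
        "p50_s": cuts[49],
        "p95_s": cuts[94],
    }

summary = stress_test(predict)
```

Reporting percentiles instead of an average keeps slow outliers visible, which is usually what a stress test is trying to surface.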

Challenges in Testing AI Systems

AI systems introduce challenges not found in traditional testing. Models are often opaque, which makes their results difficult to interpret. The nature of AI applications requires ongoing oversight through comprehensive, thorough testing strategies. Issues such as ethical AI and transparency also deserve particular attention.

Rigorous testing is a must to maintain trust in intelligent technologies. Without a structured testing approach, even the most advanced language models or AI agents can deliver unreliable results.

Future Trends & Advanced Methods for Testing Generative AI Systems and AI Applications

The evolution of generative AI has introduced new challenges in model testing, such as analyzing the creativity and relevance of outputs.

Testing generative AI means verifying that outputs are not only accurate but also meaningful, ethical, and safe. Since large language models and image generators create new content, rigorous testing becomes crucial. Common practices include:

- Exploratory testing

- Adversarial testing to challenge outputs with biased or misleading prompts.

- Functional testing to check generated content aligns with the application’s purpose.

- Automation testing

With these practices, developers can use testing to catch errors early and produce more trustworthy results.
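A functional check on generated content might be sketched as a set of simple rules applied to every output. Everything here is an assumption for illustration: the `generate` stub stands in for a real model call, and the deny-list, length budget, and topic rule are placeholders for an application's actual policy.

```python
import re

BLOCKED_TERMS = {"password", "ssn"}   # illustrative deny-list, not a real policy
MAX_WORDS = 60                         # assumed length budget for this app

def generate(prompt: str) -> str:
    """Hypothetical stand-in for a call to a generative model."""
    return f"Here is a short summary about {prompt}: it covers the key points."

def check_output(prompt_topic: str, text: str) -> list[str]:
    """Return a list of rule violations; an empty list means all checks passed."""
    problems = []
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        problems.append("empty output")
    if len(words) > MAX_WORDS:
        problems.append("output exceeds length budget")
    if BLOCKED_TERMS & set(words):
        problems.append("output contains a blocked term")
    if prompt_topic.lower() not in text.lower():
        problems.append("output does not mention the requested topic")
    return problems

# Run the same checks over every prompt in the suite.
prompts = ["solar panels", "model drift"]
violations = {p: check_output(p, generate(p)) for p in prompts}
```

Rule-based checks like these only cover what can be stated mechanically; judging whether an output is meaningful or ethical typically still needs human review or a separate evaluation step.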

AI Agents and Application Testing

As AI agents gain popularity across industries, validating their behavior is a must. Testing these agents involves integration testing with broader systems, including their communication with users and external tools. A testing platform should simulate real-world workflows so that teams can observe how the agent adapts.

When evaluating an AI app, developers must check for decision-making accuracy, responsiveness, and adherence to ethical boundaries. System testing verifies the complete lifecycle of agent operations. This comprehensive testing approach helps ensure reliable AI performance.
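One way to simulate a workflow for an agent is to replace its external tools with test doubles and replay a scripted conversation. The sketch below assumes a hypothetical `SimpleAgent` and `FakeWeatherTool`; neither corresponds to a real framework, and a production agent would be far less predictable than this rule-based one.

```python
class FakeWeatherTool:
    """Test double for an external tool; records every call it receives."""
    def __init__(self):
        self.calls = []

    def lookup(self, city: str) -> str:
        self.calls.append(city)
        return f"Sunny in {city}"

class SimpleAgent:
    """Hypothetical agent: routes weather questions to a tool, refuses the rest."""
    def __init__(self, weather_tool):
        self.weather_tool = weather_tool

    def handle(self, message: str) -> str:
        text = message.lower()
        if "weather in" in text:
            city = message[text.index("weather in") + len("weather in"):].strip(" ?.")
            return self.weather_tool.lookup(city)
        return "Sorry, I can only help with weather questions."

def run_scenario(agent, tool, steps):
    """Replay a scripted conversation; return replies and the tool-call log."""
    replies = [agent.handle(step) for step in steps]
    return replies, list(tool.calls)

tool = FakeWeatherTool()
agent = SimpleAgent(tool)
replies, tool_calls = run_scenario(
    agent, tool,
    ["What is the weather in Paris?", "Transfer $500 to account 1234"],
)
```

Asserting on the tool-call log as well as the replies checks both what the agent said and what it actually did, which matters when an agent can take actions outside its intended scope.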

Frameworks, Tools, and Monitoring Programs

| Category | What it’s for | Good fits | Notable examples |
| --- | --- | --- | --- |
| ML testing & data quality | Pre/post-training checks, data validation, model tests, drift reports | DS/ML teams needing automated checks and visual reports | Deepchecks (data & model tests for classification, regression, and LLMs); Great Expectations (GX) (data quality validation via “Expectations”); Evidently AI (drift detection, reports, and monitoring dashboards); Alibi Detect (Seldon) (drift, outlier, and adversarial detection) |
| Experiment tracking & lifecycle | Tracking runs, metrics, and params; comparing experiments; lineage; model registry | Any ML workflow | MLflow; Weights & Biases (W&B) |
| Model monitoring / ML observability | Production drift, data quality, latency, incidents, real-time dashboards | MLOps/Platform teams | WhyLabs; Arize AI; Fiddler; Superwise; NannyML (post-deployment performance estimation without labels) |
| General observability & APM (useful for AI apps) | Infra/app logs, metrics, traces, alerting, anomaly detection | | Datadog; Dynatrace; New Relic; Grafana / Prometheus; LogicMonitor |
| Cloud AI monitoring (GenAI & LLM apps) | Safety/performance, prompt/response logging, evals | Teams building LLM/agentic apps | Azure AI Foundry Observability (similar features exist across major clouds) |

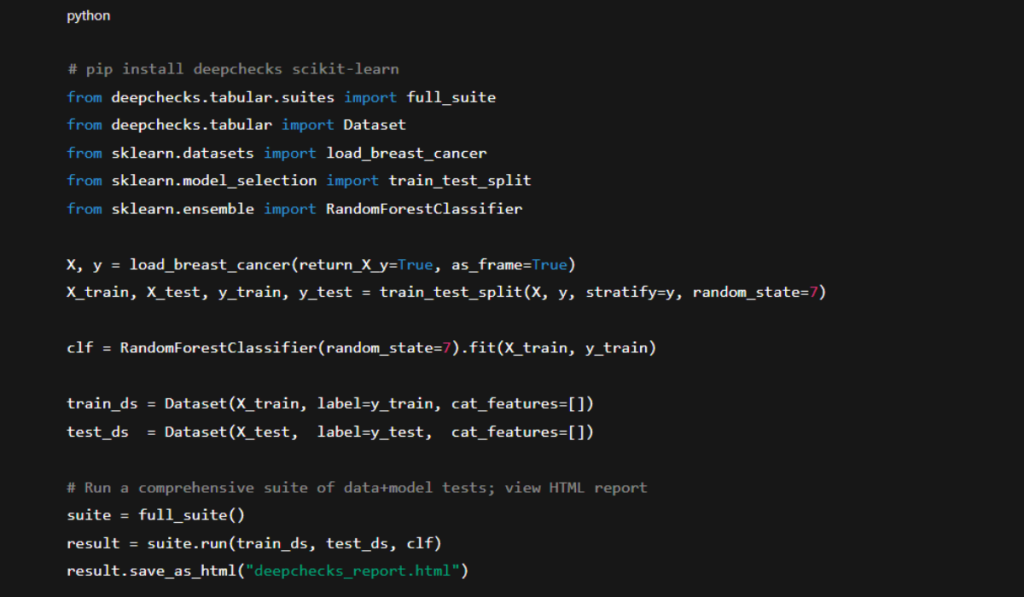

1) Deepchecks — run data & model checks (Python)

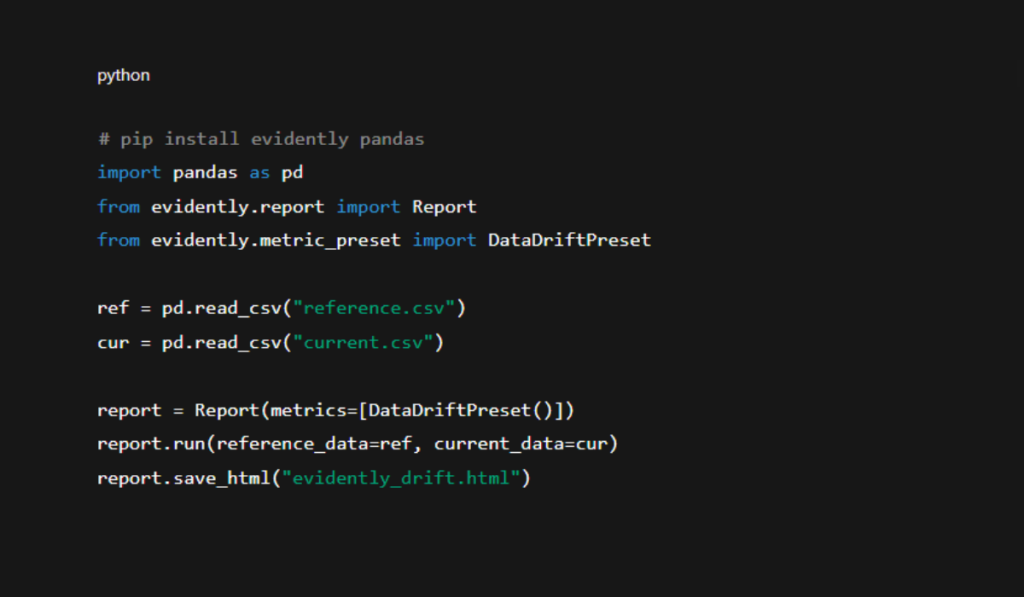

2) Evidently — generate a drift report for a reference vs. the current dataset

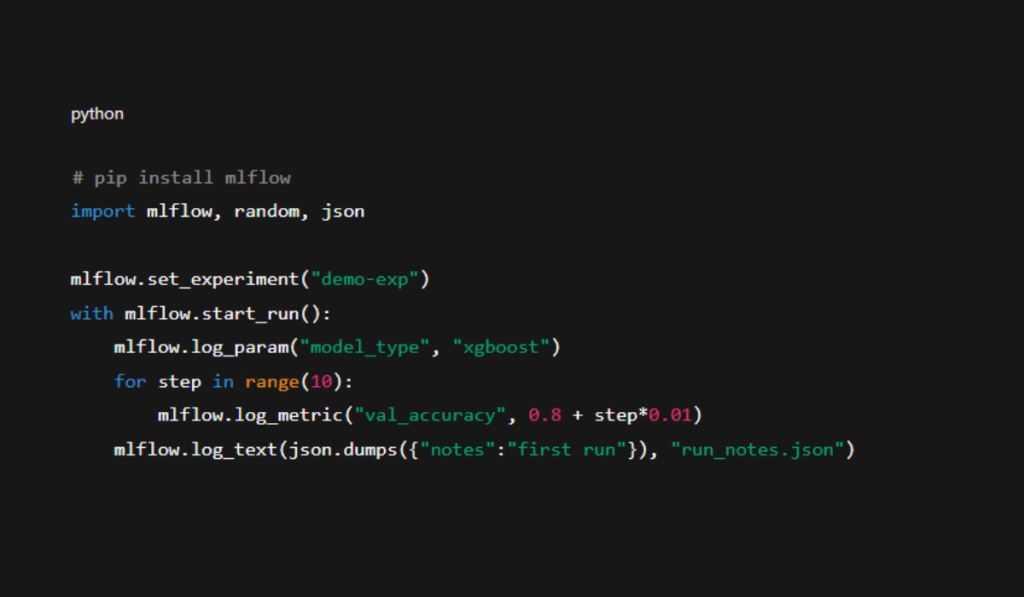

3) MLflow — log params/metrics/artifacts for each training run

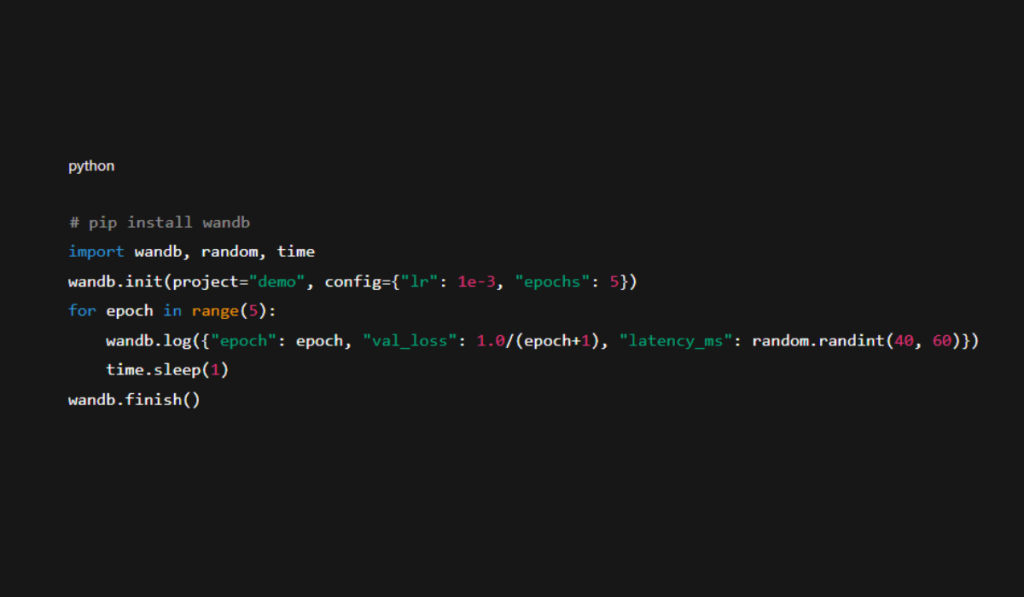

4) W&B — track experiments and basic model monitoring

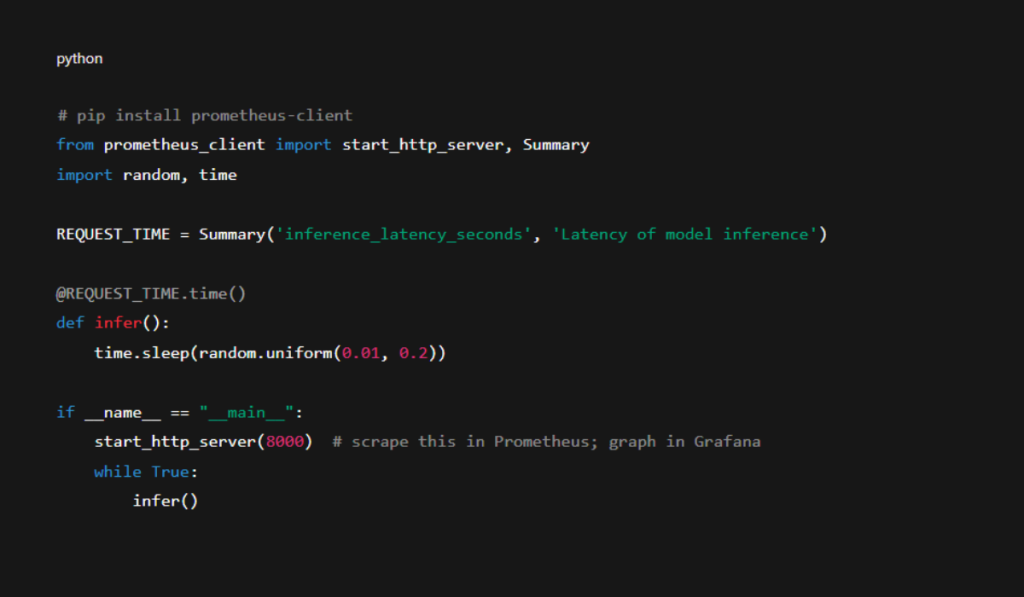

5) Prometheus + Grafana — expose custom app/model metrics and visualize

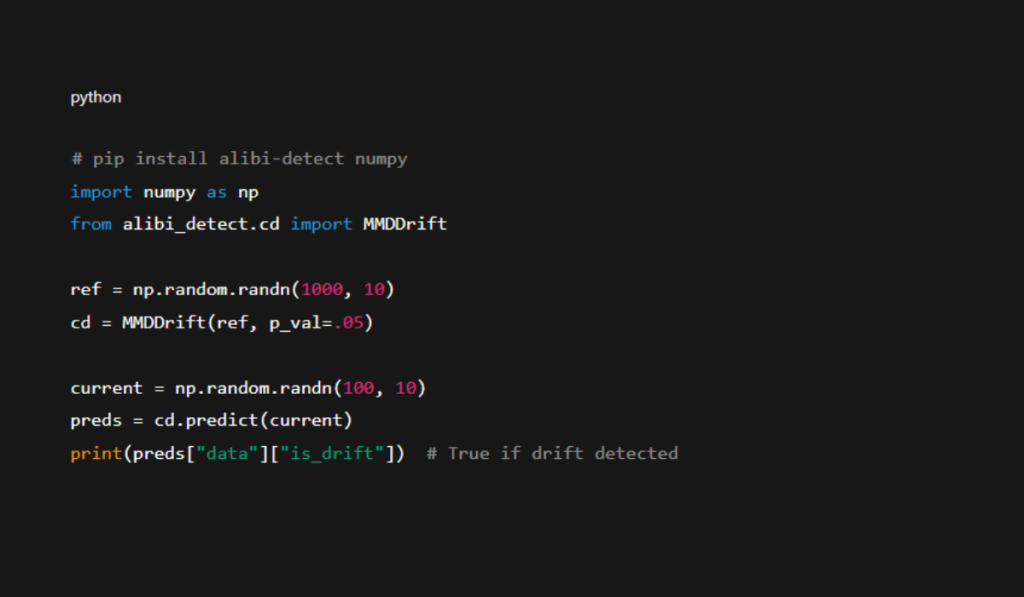

6) Alibi Detect — deploy drift/anomaly detection in production
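The idea behind drift detection, comparing a reference sample against current data, can be sketched without any of the tools above. The example below is a hand-rolled two-sample Kolmogorov-Smirnov statistic using only the standard library; it is not the API of Alibi Detect, Evidently, or any other tool listed here, and the 0.2 threshold and synthetic data are assumptions for illustration.

```python
import random
from bisect import bisect_right

def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    max_gap = 0.0
    for value in ref + cur:
        cdf_ref = bisect_right(ref, value) / len(ref)
        cdf_cur = bisect_right(cur, value) / len(cur)
        max_gap = max(max_gap, abs(cdf_ref - cdf_cur))
    return max_gap

def has_drifted(reference, current, threshold=0.2):
    """Flag drift when the KS statistic exceeds an assumed, tunable threshold."""
    return ks_statistic(reference, current) > threshold

# Synthetic feature values, generated only to exercise the functions above.
rng = random.Random(42)
reference = [rng.gauss(0.0, 1.0) for _ in range(500)]   # training-time feature
same_dist = [rng.gauss(0.0, 1.0) for _ in range(500)]   # production, no shift
shifted = [rng.gauss(1.0, 1.0) for _ in range(500)]     # production, mean shift

stable_flag = has_drifted(reference, same_dist)
drift_flag = has_drifted(reference, shifted)
```

Dedicated libraries add what this sketch lacks, such as p-values, multivariate tests, and handling of categorical features, so in production it is usually better to rely on them than on a hand-written statistic.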

Ethical AI and Reliability Considerations

Building ethical AI requires more than just technical evaluation. Testing must address bias in AI models, data fairness, and transparency in decision making. A structured process helps ensure that AI behaves responsibly.

Thorough testing frameworks ensure that outputs are unbiased and inclusive. This is especially true for healthcare, finance, and legal systems, where the reliability of AI models directly impacts human lives.
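One simple, widely used bias check is the demographic parity gap: the difference in positive-prediction rates between groups. The sketch below assumes made-up groups, toy predictions, and an arbitrary 0.2 threshold; it is one possible fairness metric among several, and the right metric and threshold depend on the use case.

```python
from collections import defaultdict

def positive_rates(records):
    """Share of positive predictions per group. records: (group, prediction) pairs."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for group, prediction in records:
        counts[group][0] += int(prediction == 1)
        counts[group][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}

def demographic_parity_gap(records):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())

# Toy predictions for two made-up groups, for illustration only.
predictions = [
    ("group_a", 1), ("group_a", 1), ("group_a", 0), ("group_a", 1),
    ("group_b", 1), ("group_b", 0), ("group_b", 0), ("group_b", 0),
]
gap = demographic_parity_gap(predictions)
MAX_ALLOWED_GAP = 0.2  # assumed policy threshold; set per use case
fairness_check_passed = gap <= MAX_ALLOWED_GAP
```

In this toy data the check fails, because one group receives positive predictions three times as often as the other; a result like that would prompt a closer look at the training data and features.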

Conclusion

Testing is essential to the success of modern AI. From validating language models to securing AI-based applications, organizations must adopt comprehensive testing methodologies. A balanced testing approach that integrates automated testing, exploratory testing, stress testing, and functional testing helps build reliable AI capable of meeting real-world demands. Read our other blog posts for more on this topic.

FAQs

Why is AI model testing important?

AI model testing ensures reliability and performance. It validates that models deliver accurate results, work under stress, and integrate smoothly with other systems, reducing risks in real-world applications.

What are common AI testing strategies?

Key strategies include adversarial testing, exploratory testing, automated testing, and system testing. These approaches help expose hidden weaknesses, assess scalability, and validate AI performance.

What challenges exist in testing AI systems?

AI testing is complex due to opaque models, evolving behavior, and bias risks. Continuous monitoring is a must to maintain trust and reliability in AI applications.